Research

The aim of this page is to formulate a possible solution to AI bias in motion tracking and to outline a prototype that could lead to more accurate and inclusive animated models produced through motion tracking.

AI Learning: Can AI Learn the Difference Between People and Objects?

The 3D animations created in Rokoko tell me that the motion-tracking AI doesn't just ignore the objects being moved; it doesn't process them at all. Every time an object appears on camera, the AI does not treat it as existing within the scene. As a result, if a moving object is in the way of the body being tracked, the AI becomes confused and attempts to fill in the blanks. This can be seen in my hair-brushing model, where the AI replaced the brush with my hand so that the motion would make sense.

During my time studying Digital Media and the Senses, we learned about AI and the way it could learn through a program called "Teachable Machine". This program allows you to teach an AI the difference between people and objects on an extremely small scale. Its massive limitations, including the limit on what you can achieve with it, tell me that it is not a good system to base my project on. I did create an extremely simplistic design in "Teachable Machine" before starting my research to represent a simplified version of what I wanted to create. However, I then went on to research other systems of AI learning and motion tracking to ensure my prototype was well informed and of the best possible quality. The "Teachable Machine" link to a highly simplified project is below:

This initial blueprint in "Teachable Machine" helped me to keep my research focused on what I wanted to achieve as I started looking into AI learning and motion tracking.

Different Types of Motion Capture

To fully research this project, I decided to start where I recorded my animations: Rokoko's official website. Here they discuss the different types of motion capture and what they are used for. Rokoko focuses on two different types of motion tracking:

Inertial Motion Tracking: Based on the principles of inertial force, where a sensor is physically placed on whatever is being tracked. It relies on physical sensors rather than AI and is the type used in motion capture suits.

Optical Motion Tracking: A camera sensor uses light to track points of movement on the object. This is the type of motion tracking used to make my animations.

As Optical Motion Tracking was the type used to make my animations, this will be the type of motion tracking I will be focusing on for this project.

According to Rokoko, many high-budget studios carry out optical motion tracking using multiple small cameras to properly capture the movement of people. This gives high-quality results for those with larger budgets, but for the average person it would be impossible, which leads to the use of AI optical tracking through phone cameras and webcams.

Through further research, I also discovered that optical motion tracking is used outside of the entertainment industries, often to track movement in stores, on roads and out in public. This told me that motion-tracking AI is capable of tracking objects in certain circumstances. However, the capabilities of the AI still depend on a variety of factors that can make it biased; for example, the tracking's reliance on light makes it unreliable in darker scenarios.

Object Tracking VS Object Detection

When researching AIs that could track the movements of objects, I found that there are two different ways that an AI can respond to objects.

Object Detection is when an AI is able to recognize and categorise an object into a particular class and is often used in applications such as face recognition or reverse image searches.

Object Tracking is when a detected object is followed and monitored over time. It maintains the identity of the object across multiple frames, even if the object is temporarily out of view.

This research told me that to make a prototype for my project I would have to examine both object detection and object tracking to ensure that all elements were tracked to the fullest potential.

Since I used video-based object tracking for my animations, this is the type of tracking I will use in my prototype. The prototype will examine both object detection and object tracking so that AI motion capture can overcome its bias against non-human objects, avoid external biases such as colour, and produce more accurate animations as a result.

How does AI track people & objects?

When researching how to track people using AI, I consistently came across three key steps in the AI motion tracking process:

Step 1: Detecting the Object - As mentioned above, this step is where the AI detects the object or person being tracked and categorises it into a specific class. This is done through an algorithm that can distinguish between different classes of data.

Step 2: Unique ID Assignment - The prototype I want to create would be tracking both objects and people as separate classifications, and because of this the AI will need to be able to tell them apart. By assigning each element a unique ID, the AI will be able to follow specific objects and people within the scene.

Step 3: Motion Tracking - The tracker will estimate the positions of each detected object or person within the scene to track any movements they may make. This is the final step of the process.
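
The three steps above can be sketched in a few lines of code. This is only a minimal illustration, written in Python rather than the JavaScript used later on this page, and it is not how Rokoko or any particular tracker works internally. It assumes Step 1 has already produced a list of labelled detections for each frame (hand-written here), and the names `Detection`, `Track`, `CentroidTracker`, `max_distance` and `max_missed` are all hypothetical. Step 2 gives every new detection a unique ID, and Step 3 matches each known track to the nearest detection with the same label in the next frame.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Detection:
    label: str  # class assigned in Step 1, e.g. "person" or "brush"
    x: float    # centre of the detected box, in pixels
    y: float


@dataclass
class Track:
    track_id: int    # unique ID assigned in Step 2
    label: str
    x: float
    y: float
    missed: int = 0  # consecutive frames with no matching detection


class CentroidTracker:
    """Toy tracker: nearest-centre matching between consecutive frames."""

    def __init__(self, max_distance=50.0, max_missed=5):
        self.max_distance = max_distance  # furthest a centre may move per frame
        self.max_missed = max_missed      # frames a track survives while unseen
        self.tracks = {}
        self.next_id = 0

    def update(self, detections):
        unmatched = list(detections)
        # Step 3: move each existing track to its nearest same-label detection.
        for track in self.tracks.values():
            candidates = [d for d in unmatched if d.label == track.label]
            best = min(
                candidates,
                key=lambda d: hypot(d.x - track.x, d.y - track.y),
                default=None,
            )
            if best is not None and hypot(best.x - track.x, best.y - track.y) <= self.max_distance:
                track.x, track.y, track.missed = best.x, best.y, 0
                unmatched.remove(best)
            else:
                track.missed += 1  # not seen this frame, but keep its identity
        # Step 2: anything left over is new, so it receives a fresh unique ID.
        for det in unmatched:
            self.tracks[self.next_id] = Track(self.next_id, det.label, det.x, det.y)
            self.next_id += 1
        # Forget tracks that have been out of view for too long.
        self.tracks = {
            tid: t for tid, t in self.tracks.items() if t.missed <= self.max_missed
        }
        return list(self.tracks.values())


if __name__ == "__main__":
    tracker = CentroidTracker()
    frames = [
        [Detection("person", 100, 100), Detection("brush", 120, 80)],
        [Detection("person", 105, 102)],  # brush hidden for one frame
        [Detection("person", 110, 104), Detection("brush", 130, 85)],
    ]
    for frame in frames:
        for t in tracker.update(frame):
            print(t.track_id, t.label, t.x, t.y, t.missed)
```

In this example the brush disappears for one frame and then returns, and because its track is kept alive while it is out of view it comes back with the same ID instead of being treated as a new object. The person and the brush are also tracked as separate entities with their own labels, which is the behaviour I want my prototype to have.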

To make my prototype, I must consider each of these factors in how AI tracks motion. I want my prototype to acknowledge that humans and objects are two separate entities, but that we work together to create motion. I want this prototype to be able to look at AI as something that can learn and examine how AI can become less biased through algorithms and technology.

Steps 1 and 3: Object Detection & Motion Tracking

As I thought about how to develop my prototype, I found that much of my project relied on machine learning to be successful. I would have to teach a machine how to recognize different objects and how to track them as they move. Because of this, I decided that to properly understand how a machine tracks objects and movement, I would have to build one myself. Using prior knowledge of machine learning and coding, I followed a tutorial on how to make a motion-tracking AI in JavaScript and how to get the AI to tell different objects apart. The tutorial I used was published on YouTube by "The Coding Train": https://www.youtube.com/watch?v=QEzRxnuaZCk

I have linked the created AI below:

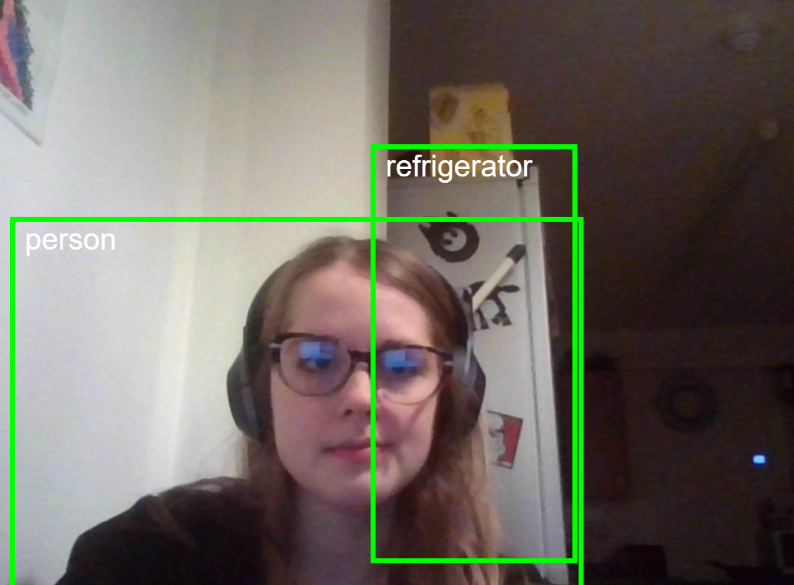

The AI created through this tutorial is able to detect the existence of a moving object and track where that object is, showcasing the completion of Steps 1 and 3 of the motion detection process. However, I still needed to look into Step 2 of the process: the classification of people and objects and how they are different. The AI I created can clearly tell the difference between people and objects with some accuracy. However, this only addresses the discrimination against objects, and only to a certain extent, as the AI can identify certain objects but not others, such as the glasses on my face. Because of this, I decided to do further research into the ID assignment of objects in AI, starting with the classification system used within the AI I created.
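
To make the difference between people and objects concrete, here is a small sketch of how the detections for one frame could be sorted into two groups. It is written in Python for illustration, not in the JavaScript of the tutorial, and it is not the code of the AI above. It assumes each detection is a dictionary with a `label` and a `confidence` value, which are assumed field names, and the function name `split_people_and_objects` and the `min_confidence` threshold are hypothetical.

```python
def split_people_and_objects(detections, min_confidence=0.5):
    """Separate one frame's detections into people and non-human objects."""
    people, objects = [], []
    for det in detections:
        # Ignore detections the model itself is not sure about.
        if det["confidence"] < min_confidence:
            continue
        if det["label"] == "person":
            people.append(det)
        else:
            objects.append(det)
    return people, objects


if __name__ == "__main__":
    # Hand-written example values, not real output from my AI.
    frame = [
        {"label": "person", "confidence": 0.92},
        {"label": "cell phone", "confidence": 0.71},
        {"label": "toothbrush", "confidence": 0.30},
    ]
    people, objects = split_people_and_objects(frame)
    print(len(people), "person,", len(objects), "object")
```

A sketch like this can only sort what the detector reports. An item the model has no class for would never appear in the list in the first place, which may be why some objects are never identified.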

COCO Dataset and the Identification of Non-Human Objects

The COCO Dataset is a collection of over 100,000 images created for use in machine learning. Images are gathered from the web, and the various objects in them are identified and highlighted so that the AI can learn to separate the subject of a photo from everything else. For example, to teach a machine to identify a dog, the creator would gather many dog images of multiple breeds to help the AI see past the differences between breeds and identify them all as the same animal.

The COCO dataset is broad and helpful when identifying everyday objects for the AI. However, the dataset is flawed when it comes to identifying objects needed for medical reasons: no search results appear for wheelchairs or crutches, making it biased against those with physical disabilities. Furthermore, when looking at the photos under the "person" category of the dataset, I noticed that rather than identifying people based on their features, the dataset identifies them based on shape, using outlines to show what a human looks like. This means that it is likely that the AI is biased against colour, with past studies showing that AI struggles more to identify darker skin tones. From this, I learned how AI can be programmed to identify objects based on their shape in order to assign them a specific ID, showcasing Step 2 of the object detection process.
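
The gap described above can be checked directly against the list of object categories. The sketch below, in Python, lists the 80 object categories used for COCO object detection and reports which requested items have no category. The exact spelling of some names can differ between tools (for example "tv" or "couch"), so the list should be treated as an assumption, and the function name `missing_from_coco` is hypothetical.

```python
# The 80 COCO object detection categories (spellings may vary between tools).
COCO_CATEGORIES = frozenset(
    name.strip()
    for name in """
    person, bicycle, car, motorcycle, airplane, bus, train, truck, boat,
    traffic light, fire hydrant, stop sign, parking meter, bench, bird, cat,
    dog, horse, sheep, cow, elephant, bear, zebra, giraffe, backpack,
    umbrella, handbag, tie, suitcase, frisbee, skis, snowboard, sports ball,
    kite, baseball bat, baseball glove, skateboard, surfboard, tennis racket,
    bottle, wine glass, cup, fork, knife, spoon, bowl, banana, apple,
    sandwich, orange, broccoli, carrot, hot dog, pizza, donut, cake, chair,
    couch, potted plant, bed, dining table, toilet, tv, laptop, mouse,
    remote, keyboard, cell phone, microwave, oven, toaster, sink,
    refrigerator, book, clock, vase, scissors, teddy bear, hair drier,
    toothbrush
    """.split(",")
)


def missing_from_coco(wanted):
    """Return the requested names that have no COCO category."""
    return [name for name in wanted if name.lower() not in COCO_CATEGORIES]


if __name__ == "__main__":
    print(missing_from_coco(["person", "wheelchair", "crutches", "glasses"]))
```

A model trained only on these categories has no label available for a wheelchair or a pair of crutches, so it cannot give them an ID in Step 2 however well the tracking itself works.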

The research into object detection and motion tracking was highly useful in helping me discover how AI tracks our movement through a three-step system, each step of which is important for the creation of an accurate body-tracking AI. Not only this, but I now have a better insight into why AI may be biased against certain people and objects, which will help me to develop a prototype to overcome these biases.